Understanding and Empowering Disabled Tech Users: Professor Jonggi Hong

Stevens computer science professor Jonggi Hong didn’t set out to change the world.

But he just might do it anyway.

“As a kid, in South Korea, I loved playing video games,” he laughs. “That led me naturally to wanting to know how to program and design video games, which led me to study computer science.”

But along the way, he found a new research focus — and a new passion for helping people with disabilities.

Developing disability tech alongside the users

Speech recognition, auto-translation and image-recognition AI have gone mainstream. Our phones can now predict, with quite high accuracy, what we’re seeing and what people are saying.

But for blind, deaf and other disabled users, these tools don’t always work perfectly — and even small errors can be dangerous.

“As sighted users, most of us don’t think about this much,” says Hong. “But a blind person’s experience of an iPhone is very different from mine.”

He came to that realization after completing bachelor’s and master’s degrees at KAIST (Korea Advanced Institute of Science & Technology) and beginning doctoral studies at the University of Maryland — where he joined a research group that happened to be exploring ways to use the new tools of AI to help blind and other tech users better use devices and technologies.

During the course of those Ph.D. studies, Hong would develop improved screens for smart watches (including one called SplitBoard that toggles between two half-screens as you flick your wrist); build wrist-based haptic devices; and develop accessible user interfaces for AI systems such as speech recognition and object recognition, all to improve disabled users’ experience of wearable devices and applications.

Following graduation, he worked remotely as an intern on Microsoft’s Ability Team during the COVID-19 pandemic, helping build a system that detects a camera’s image frame and could assist blind users in reorienting their cameras or devices to get better photographs.

Later he moved to San Francisco to work with the nonprofit Smith-Kettlewell Eye Research Institute as a postdoctoral researcher under the direction of James Coughlan, a leading scientist exploring questions around AI and blind users.

There, Hong built and analyzed novel machine-learning applications to improve computer vision systems for blind navigation, among other projects, before accepting his new role with Stevens.

“The Smith-Kettlewell Institute has been a great place for my research,” he says. “I have interacted with computer scientists, but also with people that have many other different kinds of expertise such as doctors and neuroscientists.”

Hong believes there are gaps between evolving AI technologies, which are racing ahead, and the needs of blind people and other disabled users.

“One of the gaps I have noticed,” he says, “is that most or all machine-learning datasets are built by sighted people — even sets meant to develop services for blind people — even though the real-world experiences of and data from blind people are very different from those of sighted people.”

If a computer system makes mistakes identifying an object in the street, a web page, a newspaper or a photo, for instance, “it is much harder for blind people to deal with those kinds of errors. They really depend on all these automatic decisions made by software and systems, and even tend to over-trust them.”

Even when an image caption is obviously wrong to a sighted user, a blind user may have little or no sense that the description is slightly or wildly inaccurate.

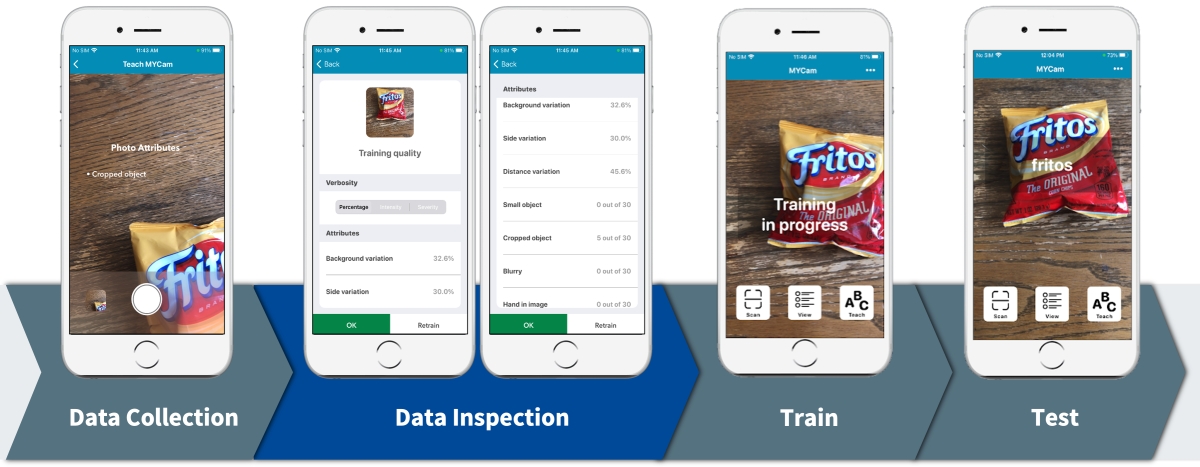

“Now we are starting to develop and test some systems where blind users can explore images,” says Hong, “by touching their screens and judge whether an auto-generated caption is correct or not; give feedback; and help correct those kinds of labeling errors.”

It’s vital, he adds, to test new systems with the groups of people for whom they’re ultimately intended.

“Some people think we can test systems for the blind using sighted people who are blindfolded,” Hong cautions. “We shouldn’t do that. You need to understand the unique challenges blind people have by giving them the technologies to test them.”

“If I build a system for blind people, my studies always use blind subjects to test them.”

Promising new research directions

Now, as he returns to the East Coast to begin a new career at Stevens, Hong enthuses about the hilltop campus’ Manhattan views — “I didn’t know that about Stevens before I visited!” — as well as its research strengths.

“One of the things that was attractive to me here was that Stevens faculty were already talking about future research directions that I, too, have been thinking about pursuing,” he notes. “So I was very happy to receive the offer to come teach here.”

At Stevens, Hong will program mainly in Apple’s Swift language, chosen for its utility with iPhone and iPad devices, as well as Python, Java, Android, C++ and even R. He will continue to develop novel research in areas including computer vision, for users both with and without disabilities, as well as personalizable AI systems that users could one day train to adapt to their own individual movements, speech patterns or other personal characteristics.

He also plans to reach out and connect with local disability communities.

“It is always a challenge to recruit blind people for user studies in this research,” he points out. “When I get to Stevens, I’d like to have a good partnership with the blind community. I think that would be not only beneficial for my own research, but for Stevens and for the local community.”

And he’s looking forward to teaching for the first time.

“That will be a fun challenge,” he concludes. “Getting back in the classroom, this time as a professor.”